Initial publication.

This commit is contained in:

commit

6d40170c78

49 changed files with 13633 additions and 0 deletions

2

.gitignore

vendored

Normal file

2

.gitignore

vendored

Normal file

|

|

@ -0,0 +1,2 @@

|

|||

_bringrss/*

|

||||

myscripts/*

|

||||

12

CONTACT.md

Normal file

12

CONTACT.md

Normal file

|

|

@ -0,0 +1,12 @@

|

|||

Contact

|

||||

=======

|

||||

|

||||

Please do not open pull requests without talking to me first. For serious issues and bugs, open a GitHub issue. If you just have a question, please send an email to `contact@voussoir.net`. For other contact options, see [voussoir.net/#contact](https://voussoir.net/#contact).

|

||||

|

||||

I also mirror my work to other git services:

|

||||

|

||||

- https://github.com/voussoir

|

||||

|

||||

- https://gitlab.com/voussoir

|

||||

|

||||

- https://codeberg.org/voussoir

|

||||

29

LICENSE.txt

Normal file

29

LICENSE.txt

Normal file

|

|

@ -0,0 +1,29 @@

|

|||

BSD 3-Clause License

|

||||

|

||||

Copyright (c) 2022, Ethan Dalool aka voussoir

|

||||

All rights reserved.

|

||||

|

||||

Redistribution and use in source and binary forms, with or without

|

||||

modification, are permitted provided that the following conditions are met:

|

||||

|

||||

1. Redistributions of source code must retain the above copyright notice, this

|

||||

list of conditions and the following disclaimer.

|

||||

|

||||

2. Redistributions in binary form must reproduce the above copyright notice,

|

||||

this list of conditions and the following disclaimer in the documentation

|

||||

and/or other materials provided with the distribution.

|

||||

|

||||

3. Neither the name of the copyright holder nor the names of its

|

||||

contributors may be used to endorse or promote products derived from

|

||||

this software without specific prior written permission.

|

||||

|

||||

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

||||

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

||||

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

||||

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

||||

FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

||||

DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

||||

SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

||||

CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

||||

OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

||||

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

||||

148

README.md

Normal file

148

README.md

Normal file

|

|

@ -0,0 +1,148 @@

|

|||

BringRSS

|

||||

========

|

||||

|

||||

It brings you the news.

|

||||

|

||||

Live demo: https://bringrss.voussoir.net

|

||||

|

||||

## What am I looking at

|

||||

|

||||

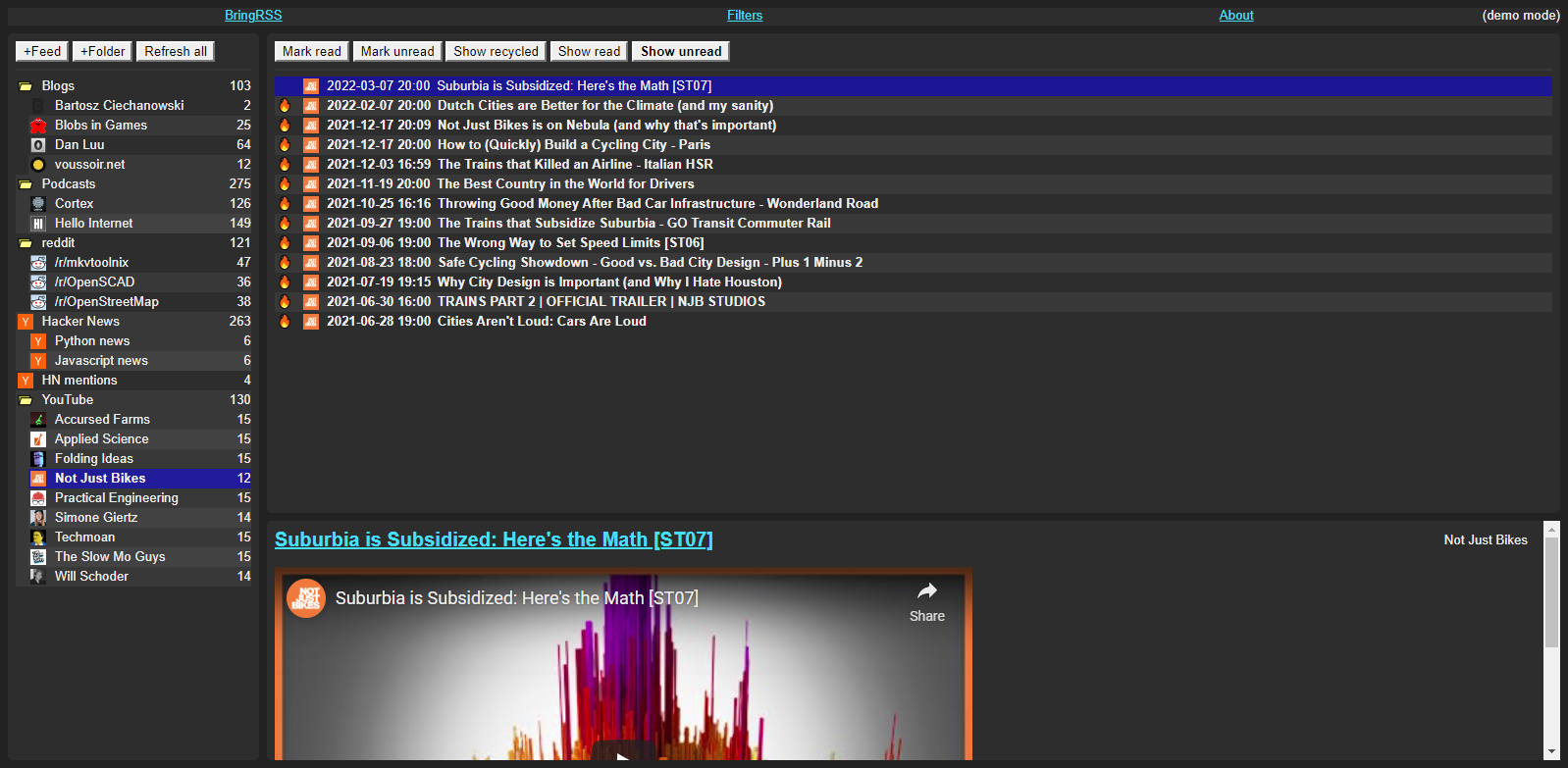

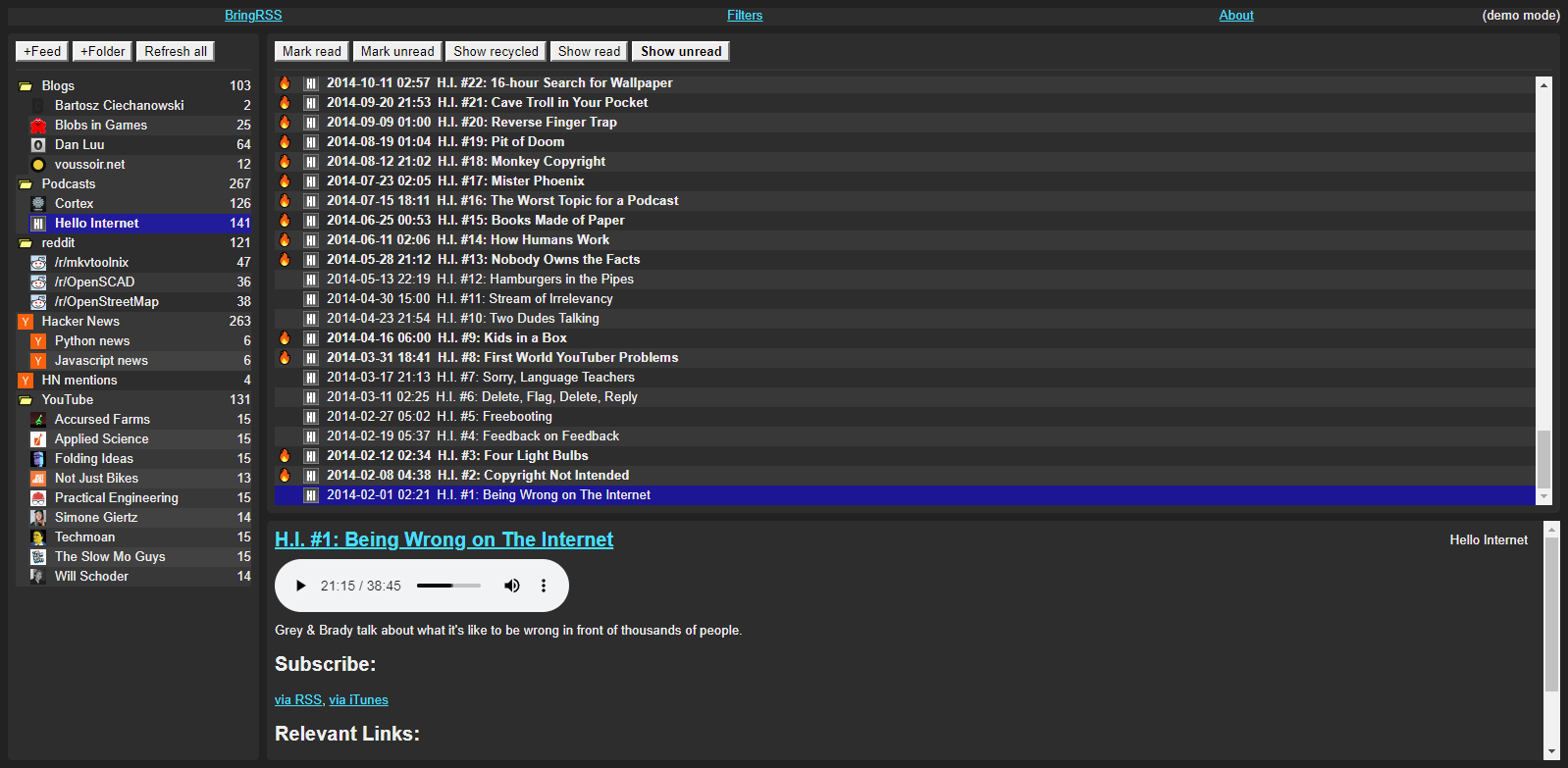

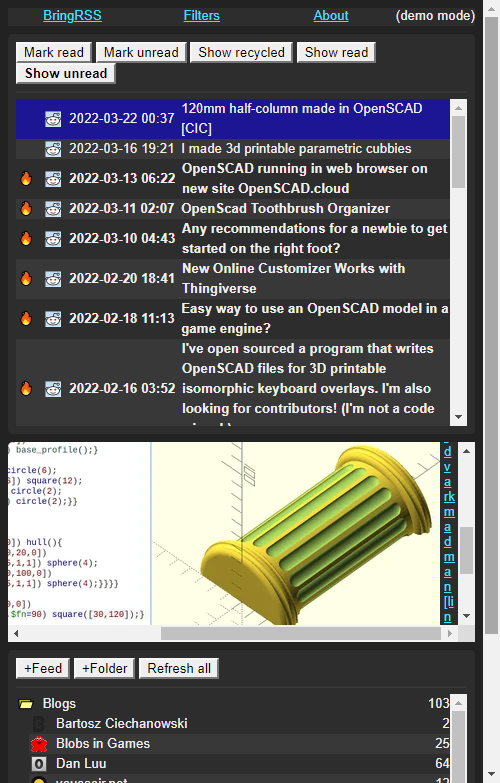

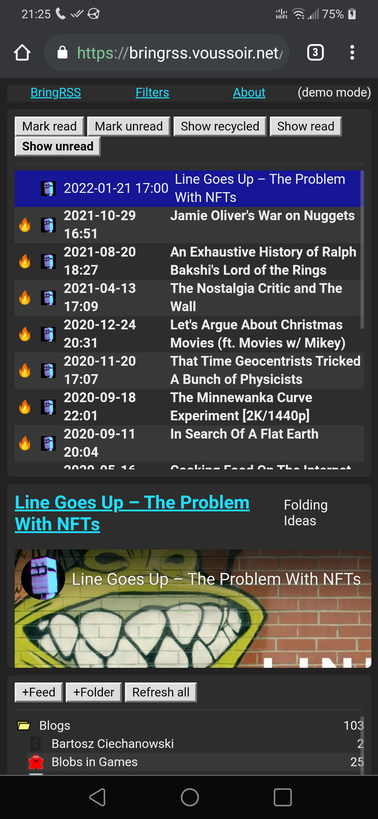

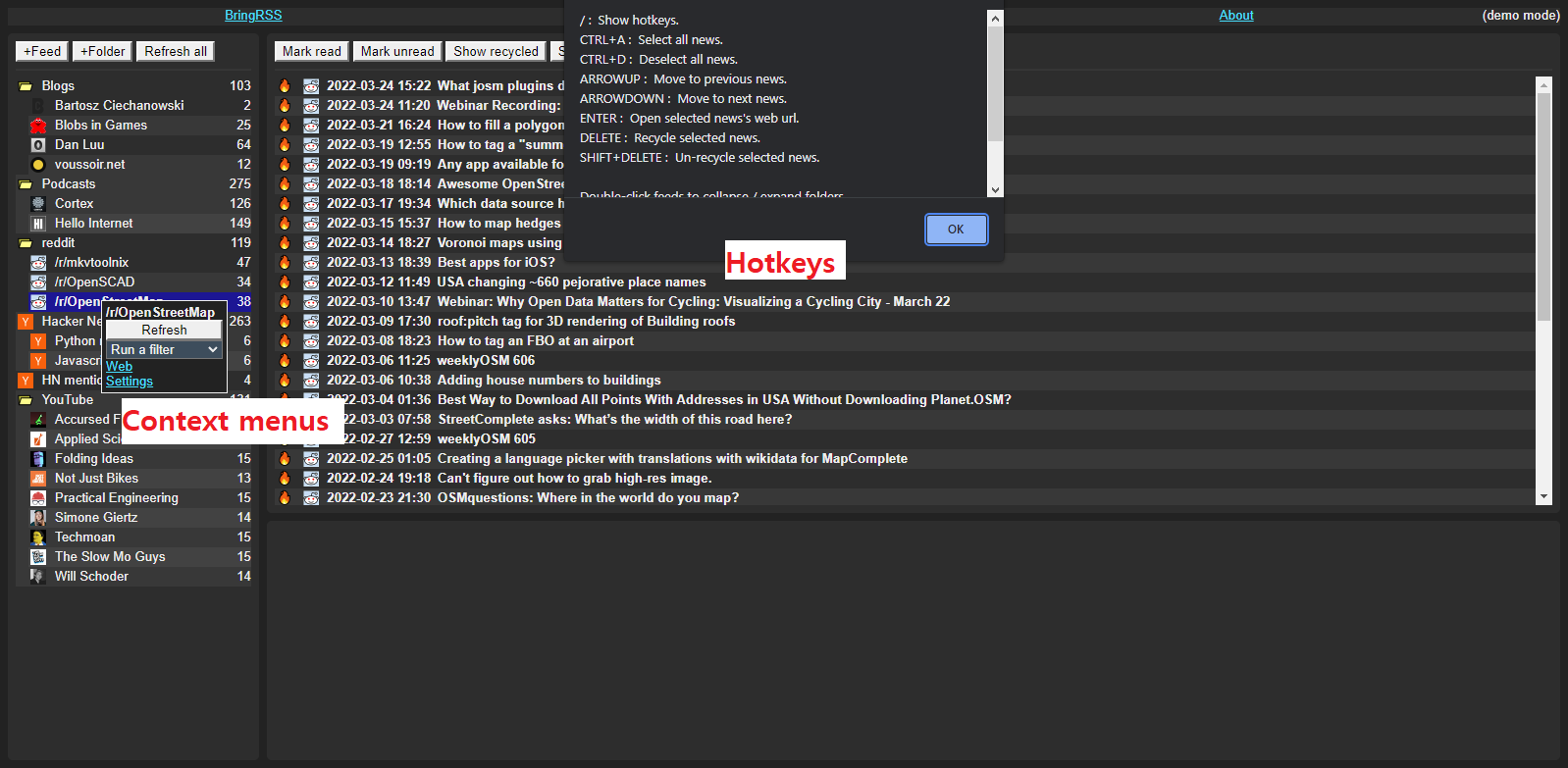

BringRSS is an RSS client / newsreader made with Python, SQLite3, and Flask. Its main features are:

|

||||

|

||||

- Automatic feed refresh with separate intervals per feed.

|

||||

- Feeds arranged in hierarchical folders.

|

||||

- Filters for categorizing or removing news based on your criteria.

|

||||

- Sends news objects to your own Python scripts for arbitrary post-processing, emailing, downloading, etc.

|

||||

- Embeds videos from YouTube feeds.

|

||||

- News text is filtered by [DOMPurify](https://github.com/cure53/DOMPurify) before display.

|

||||

- Supports multiple enclosures.

|

||||

|

||||

Because BringRSS runs a webserver, you can access it from every device in your house via your computer's LAN IP. BringRSS provides no login or authentication, but if you have a reverse proxy handle that for you, you could run BringRSS on an internet-connected machine and access your feeds anywhere.

|

||||

|

||||

## Screenshots

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Setting up

|

||||

|

||||

As you'll see below, BringRSS has a core backend package and separate frontends that use it. These frontend applications will use `import bringrss` to access the backend code. Therefore, the `bringrss` package needs to be in the right place for Python to find it for `import`.

|

||||

|

||||

1. Run `pip install -r requirements.txt --upgrade` after reading the file and deciding you are ok with the dependencies.

|

||||

|

||||

2. Make a new folder somewhere on your computer, and add this folder to your `PYTHONPATH` environment variable. For example, I might use `D:\pythonpath` or `~/pythonpath`. Close and re-open your Command Prompt / Terminal so it reloads the environment variables.

|

||||

|

||||

3. Add a symlink to the bringrss folder into that folder:

|

||||

|

||||

The repository you are looking at now is `D:\Git\BringRSS` or `~/Git/BringRSS`. You can see the folder called `bringrss`.

|

||||

|

||||

Windows: `mklink /d fakepath realpath`

|

||||

for example `mklink /d "D:\pythonpath\bringrss" "D:\Git\BringRSS\bringrss"`

|

||||

|

||||

Linux: `ln --symbolic realpath fakepath`

|

||||

for example `ln --symbolic "~/Git/BringRSS/bringrss" "~/pythonpath/bringrss"`

|

||||

|

||||

4. Run `python -c "import bringrss; print(bringrss)"`. You should see the module print successfully.

|

||||

|

||||

## Running BringRSS CLI

|

||||

|

||||

BringRSS offers a commandline interface so you can use cronjobs to refresh your feeds. More commands may be added in the future.

|

||||

|

||||

1. `cd` to the folder where you'd like to create the BringRSS database.

|

||||

|

||||

2. Run `python frontends/bringrss_cli.py --help` to learn about the available commands.

|

||||

|

||||

3. Run `python frontends/bringrss_cli.py init` to create a database in the current directory.

|

||||

|

||||

Note: Do not `cd` into the frontends folder. Stay in the folder that contains your `_bringrss` database and specify the full path of the frontend launcher. For example:

|

||||

|

||||

Windows:

|

||||

D:\somewhere> python D:\Git\BringRSS\frontends\bringrss_cli.py

|

||||

|

||||

Linux:

|

||||

/somewhere $ python /Git/BringRSS/frontends/bringrss_cli.py

|

||||

|

||||

It is expected that you create a shortcut file or launch script so you don't have to type the whole filepath every time. For example, I have a `bringcli.lnk` on my PATH with `target=D:\Git\BringRSS\frontends\bringrss_cli.py`.

|

||||

|

||||

## Running BringRSS Flask locally

|

||||

|

||||

1. Run `python frontends/bringrss_flask/bringrss_flask_dev.py --help` to learn the available options.

|

||||

|

||||

2. Run `python frontends/bringrss_flask/bringrss_flask_dev.py [port]` to launch the flask server. If this is your first time running it, you can add `--init` to create a new database in the current directory. Port defaults to 27464 if not provided.

|

||||

|

||||

3. Open your web browser to `localhost:<port>`.

|

||||

|

||||

Note: Do not `cd` into the frontends folder. Stay in the folder that contains your `_bringrss` database and specify the full path of the frontend launcher. For example:

|

||||

|

||||

Windows:

|

||||

D:\somewhere> python D:\Git\BringRSS\frontends\bringrss_flask\bringrss_flask_dev.py 5001

|

||||

|

||||

Linux:

|

||||

/somewhere $ python /Git/BringRSS/frontends/bringrss_flask/bringrss_flask_dev.py 5001

|

||||

|

||||

Add `--help` to learn the arguments.

|

||||

|

||||

It is expected that you create a shortcut file or launch script so you don't have to type the whole filepath every time. For example, I have a `bringflask.lnk` on my PATH with `target=D:\Git\BringRSS\frontends\bringrss_flask\bringrss_flask_dev.py`.

|

||||

|

||||

## Running BringRSS Flask with Gunicorn

|

||||

|

||||

BringRSS provides no authentication whatsoever, so you probably shouldn't deploy it publicly unless your proxy server does authentication for you. However, I will tell you that for the purposes of running the demo site, I am using a script like this:

|

||||

|

||||

export BRINGRSS_DEMO_MODE=1

|

||||

~/cmd/python ~/cmd/gunicorn_py bringrss_flask_prod:site --bind "0.0.0.0:PORTNUMBER" --worker-class gevent --access-logfile "-" --access-logformat "%(h)s | %(t)s | %(r)s | %(s)s %(b)s"

|

||||

|

||||

## Running BringRSS REPL

|

||||

|

||||

The REPL is a great way to test a quick idea and learn the data model.

|

||||

|

||||

1. Use `bringrss_cli init` to create the database in the desired directory.

|

||||

|

||||

2. Run `python frontends/bringrss_repl.py` to launch the Python interpreter with the BringDB pre-loaded into a variable called `B`. Try things like `B.get_feed` or `B.get_newss`.

|

||||

|

||||

Note: Do not `cd` into the frontends folder. Stay in the folder that contains your `_bringrss` database and specify the full path of the frontend launcher. For example:

|

||||

|

||||

Windows:

|

||||

D:\somewhere> python D:\Git\BringRSS\frontends\bringrss_repl.py

|

||||

|

||||

Linux:

|

||||

/somewhere $ python /Git/BringRSS/frontends/bringrss_repl.py

|

||||

|

||||

It is expected that you create a shortcut file or launch script so you don't have to type the whole filepath every time. For example, I have a `bringrepl.lnk` on my PATH with `target=D:\Git\BringRSS\frontends\bringrss_repl.py`.

|

||||

|

||||

## Help wanted: javascript perf & layout thrashing

|

||||

|

||||

I think there is room for improvement in [root.html](https://github.com/voussoir/bringrss/blob/master/frontends/bringrss_flask/templates/root.html)'s javascript. When reading a feed with a few thousand news items, the UI starts to get slow at every interaction:

|

||||

|

||||

- After clicking on a news, it takes a few ms before it turns selected.

|

||||

- The newsreader takes a few ms to populate with the title even though it's pulled from the news's DOM, not the network.

|

||||

- After receiving the news list from the server, news are inserted into the dom in batches, and each batch causes the UI to stutter if you are also trying to scroll or click on things.

|

||||

|

||||

If you have any tips for improving the performance and responsiveness of the UI click handlers and reducing the amount of reflow / layout caused by the loading of news items or changing their class (selecting, reading, recycling), I would appreciate you getting in touch at contact@voussoir.net or opening an issue. Please don't open a pull request without talking to me first.

|

||||

|

||||

I am aware of virtual scrolling techniques where DOM rows don't actually exist until you scroll to where they would be, but this has the drawback of breaking ctrl+f and also it is hard to precompute the scroll height since news have variable length titles. I would prefer simple fixes like adding CSS rules that help the layout engine make better reflow decisions.

|

||||

|

||||

## To do list

|

||||

|

||||

- Maybe we could add a very basic password system to facilitate running an internet-connected instance. No user profiles, just a single password to access the whole system. I did this with [simpleserver](https://github.com/voussoir/else/blob/master/SimpleServer/simpleserver.py).

|

||||

- "Fill in the gaps" feature. Many websites have feeds that don't reach back all the way to their first post. When discovering a new blog or podcast, catching up on their prior work requires manual bookmarking outside of your newsreader. It would be nice to dump a list of article URLs into BringRSS and have it generate news objects as if they really came from the feed. Basic information like url, title, and fetched page text would be good enough; auto-detecting media as enclosures would be better. Other attributes don't need to be comprehensive. Then you could have everything in your newsreader.

|

||||

|

||||

## Mirrors

|

||||

|

||||

https://github.com/voussoir/bringrss

|

||||

|

||||

https://gitlab.com/voussoir/bringrss

|

||||

|

||||

https://codeberg.com/voussoir/bringrss

|

||||

5

bringrss/__init__.py

Normal file

5

bringrss/__init__.py

Normal file

|

|

@ -0,0 +1,5 @@

|

|||

from . import bringdb

|

||||

from . import constants

|

||||

from . import exceptions

|

||||

from . import helpers

|

||||

from . import objects

|

||||

722

bringrss/bringdb.py

Normal file

722

bringrss/bringdb.py

Normal file

|

|

@ -0,0 +1,722 @@

|

|||

import bs4

|

||||

import random

|

||||

import sqlite3

|

||||

import typing

|

||||

|

||||

from . import constants

|

||||

from . import exceptions

|

||||

from . import helpers

|

||||

from . import objects

|

||||

|

||||

from voussoirkit import cacheclass

|

||||

from voussoirkit import pathclass

|

||||

from voussoirkit import sentinel

|

||||

from voussoirkit import sqlhelpers

|

||||

from voussoirkit import vlogging

|

||||

from voussoirkit import worms

|

||||

|

||||

log = vlogging.get_logger(__name__)

|

||||

|

||||

RNG = random.SystemRandom()

|

||||

|

||||

####################################################################################################

|

||||

|

||||

class BDBFeedMixin:

|

||||

def __init__(self):

|

||||

super().__init__()

|

||||

|

||||

@worms.transaction

|

||||

def add_feed(

|

||||

self,

|

||||

*,

|

||||

autorefresh_interval=86400,

|

||||

description=None,

|

||||

icon=None,

|

||||

isolate_guids=False,

|

||||

parent=None,

|

||||

refresh_with_others=True,

|

||||

rss_url=None,

|

||||

title=None,

|

||||

web_url=None,

|

||||

ui_order_rank=None,

|

||||

):

|

||||

if parent is None:

|

||||

parent_id = None

|

||||

else:

|

||||

if not isinstance(parent, objects.Feed):

|

||||

raise TypeError(parent)

|

||||

parent.assert_not_deleted()

|

||||

parent_id = parent.id

|

||||

|

||||

autorefresh_interval = objects.Feed.normalize_autorefresh_interval(autorefresh_interval)

|

||||

refresh_with_others = objects.Feed.normalize_refresh_with_others(refresh_with_others)

|

||||

rss_url = objects.Feed.normalize_rss_url(rss_url)

|

||||

web_url = objects.Feed.normalize_web_url(web_url)

|

||||

title = objects.Feed.normalize_title(title)

|

||||

description = objects.Feed.normalize_description(description)

|

||||

icon = objects.Feed.normalize_icon(icon)

|

||||

isolate_guids = objects.Feed.normalize_isolate_guids(isolate_guids)

|

||||

if ui_order_rank is None:

|

||||

ui_order_rank = self.get_last_ui_order_rank() + 1

|

||||

else:

|

||||

ui_order_rank = objects.Feed.normalize_ui_order_rank(ui_order_rank)

|

||||

|

||||

data = {

|

||||

'id': self.generate_id(objects.Feed),

|

||||

'parent_id': parent_id,

|

||||

'rss_url': rss_url,

|

||||

'web_url': web_url,

|

||||

'title': title,

|

||||

'description': description,

|

||||

'created': helpers.now(),

|

||||

'refresh_with_others': refresh_with_others,

|

||||

'last_refresh': 0,

|

||||

'last_refresh_attempt': 0,

|

||||

'last_refresh_error': None,

|

||||

'autorefresh_interval': autorefresh_interval,

|

||||

'http_headers': None,

|

||||

'isolate_guids': isolate_guids,

|

||||

'icon': icon,

|

||||

'ui_order_rank': ui_order_rank,

|

||||

}

|

||||

self.insert(table=objects.Feed, data=data)

|

||||

feed = self.get_cached_instance(objects.Feed, data)

|

||||

return feed

|

||||

|

||||

def get_bulk_unread_counts(self):

|

||||

'''

|

||||

Instead of calling feed.get_unread_count() on many separate feed objects

|

||||

and performing lots of duplicate work, you can call here and get them

|

||||

all at once with much less database access. I brought my /feeds.json

|

||||

down from 160ms to 6ms by using this.

|

||||

|

||||

Missing keys means 0 unread.

|

||||

'''

|

||||

# Even though we have api functions for all of this, I want to squeeze

|

||||

# out the perf. This function is meant to be used in situations where

|

||||

# speed matters more than code beauty.

|

||||

feeds = {feed.id: feed for feed in self.get_feeds()}

|

||||

childs = {}

|

||||

for feed in feeds.values():

|

||||

if feed.parent_id:

|

||||

childs.setdefault(feed.parent_id, []).append(feed)

|

||||

roots = [feed for feed in feeds.values() if not feed.parent_id]

|

||||

|

||||

query = '''

|

||||

SELECT feed_id, COUNT(rowid)

|

||||

FROM news

|

||||

WHERE recycled == 0 AND read == 0

|

||||

GROUP BY feed_id

|

||||

'''

|

||||

counts = {feeds[feed_id]: count for (feed_id, count) in self.select(query)}

|

||||

|

||||

def recursive_update(feed):

|

||||

counts.setdefault(feed, 0)

|

||||

children = childs.get(feed.id, None)

|

||||

if children:

|

||||

counts[feed] += sum(recursive_update(child) for child in children)

|

||||

pass

|

||||

return counts[feed]

|

||||

|

||||

for root in roots:

|

||||

recursive_update(root)

|

||||

|

||||

return counts

|

||||

|

||||

def get_feed(self, id) -> objects.Feed:

|

||||

return self.get_object_by_id(objects.Feed, id)

|

||||

|

||||

def get_feed_count(self) -> int:

|

||||

return self.select_one_value('SELECT COUNT(id) FROM feeds')

|

||||

|

||||

def get_feeds(self) -> typing.Iterable[objects.Feed]:

|

||||

query = 'SELECT * FROM feeds ORDER BY ui_order_rank ASC'

|

||||

return self.get_objects_by_sql(objects.Feed, query)

|

||||

|

||||

def get_feeds_by_id(self, ids) -> typing.Iterable[objects.Feed]:

|

||||

return self.get_objects_by_id(objects.Feed, ids)

|

||||

|

||||

def get_feeds_by_sql(self, query, bindings=None) -> typing.Iterable[objects.Feed]:

|

||||

return self.get_objects_by_sql(objects.Feed, query, bindings)

|

||||

|

||||

def get_last_ui_order_rank(self) -> int:

|

||||

query = 'SELECT ui_order_rank FROM feeds ORDER BY ui_order_rank DESC LIMIT 1'

|

||||

rank = self.select_one_value(query)

|

||||

if rank is None:

|

||||

return 0

|

||||

return rank

|

||||

|

||||

def get_root_feeds(self) -> typing.Iterable[objects.Feed]:

|

||||

query = 'SELECT * FROM feeds WHERE parent_id IS NULL ORDER BY ui_order_rank ASC'

|

||||

return self.get_objects_by_sql(objects.Feed, query)

|

||||

|

||||

@worms.transaction

|

||||

def reassign_ui_order_ranks(self):

|

||||

feeds = list(self.get_root_feeds())

|

||||

rank = 1

|

||||

for feed in feeds:

|

||||

for descendant in feed.walk_children():

|

||||

descendant.set_ui_order_rank(rank)

|

||||

rank += 1

|

||||

|

||||

####################################################################################################

|

||||

|

||||

class BDBFilterMixin:

|

||||

def __init__(self):

|

||||

super().__init__()

|

||||

|

||||

@worms.transaction

|

||||

def add_filter(self, name, conditions, actions):

|

||||

name = objects.Filter.normalize_name(name)

|

||||

conditions = objects.Filter.normalize_conditions(conditions)

|

||||

actions = objects.Filter.normalize_actions(actions)

|

||||

objects.Filter._jank_validate_move_to_feed(bringdb=self, actions=actions)

|

||||

|

||||

data = {

|

||||

'id': self.generate_id(objects.Filter),

|

||||

'name': name,

|

||||

'created': helpers.now(),

|

||||

'conditions': conditions,

|

||||

'actions': actions,

|

||||

}

|

||||

self.insert(table=objects.Filter, data=data)

|

||||

filt = self.get_cached_instance(objects.Filter, data)

|

||||

return filt

|

||||

|

||||

def get_filter(self, id) -> objects.Filter:

|

||||

return self.get_object_by_id(objects.Filter, id)

|

||||

|

||||

def get_filter_count(self) -> int:

|

||||

return self.select_one_value('SELECT COUNT(id) FROM filters')

|

||||

|

||||

def get_filters(self) -> typing.Iterable[objects.Filter]:

|

||||

return self.get_objects(objects.Filter)

|

||||

|

||||

def get_filters_by_id(self, ids) -> typing.Iterable[objects.Filter]:

|

||||

return self.get_objects_by_id(objects.Filter, ids)

|

||||

|

||||

def get_filters_by_sql(self, query, bindings=None) -> typing.Iterable[objects.Filter]:

|

||||

return self.get_objects_by_sql(objects.Filter, query, bindings)

|

||||

|

||||

@worms.transaction

|

||||

def process_news_through_filters(self, news):

|

||||

def prepare_filters(feed):

|

||||

filters = []

|

||||

for ancestor in feed.walk_parents(yield_self=True):

|

||||

filters.extend(ancestor.get_filters())

|

||||

return filters

|

||||

|

||||

feed = news.feed

|

||||

original_feed = feed

|

||||

filters = prepare_filters(feed)

|

||||

status = objects.Filter.THEN_CONTINUE_FILTERS

|

||||

too_many_switches = 20

|

||||

|

||||

while feed and filters and status is objects.Filter.THEN_CONTINUE_FILTERS:

|

||||

filt = filters.pop(0)

|

||||

status = filt.process_news(news)

|

||||

|

||||

switched_feed = news.feed

|

||||

if switched_feed == feed:

|

||||

continue

|

||||

|

||||

feed = switched_feed

|

||||

filters = prepare_filters(feed)

|

||||

|

||||

too_many_switches -= 1

|

||||

if too_many_switches > 0:

|

||||

continue

|

||||

raise Exception(f'{news} from {original_feed} got moved too many times. Something wrong?')

|

||||

|

||||

####################################################################################################

|

||||

|

||||

class BDBNewsMixin:

|

||||

DUPLICATE_BAIL = sentinel.Sentinel('duplicate bail')

|

||||

|

||||

def __init__(self):

|

||||

super().__init__()

|

||||

|

||||

@worms.transaction

|

||||

def add_news(

|

||||

self,

|

||||

*,

|

||||

authors,

|

||||

comments_url,

|

||||

enclosures,

|

||||

feed,

|

||||

published,

|

||||

rss_guid,

|

||||

text,

|

||||

title,

|

||||

updated,

|

||||

web_url,

|

||||

):

|

||||

if not isinstance(feed, objects.Feed):

|

||||

raise TypeError(feed)

|

||||

feed.assert_not_deleted()

|

||||

|

||||

rss_guid = objects.News.normalize_rss_guid(rss_guid)

|

||||

if feed.isolate_guids:

|

||||

rss_guid = f'_isolate_{feed.id}_{rss_guid}'

|

||||

|

||||

published = objects.News.normalize_published(published)

|

||||

updated = objects.News.normalize_updated(updated)

|

||||

title = objects.News.normalize_title(title)

|

||||

text = objects.News.normalize_text(text)

|

||||

web_url = objects.News.normalize_web_url(web_url)

|

||||

comments_url = objects.News.normalize_comments_url(comments_url)

|

||||

authors = objects.News.normalize_authors_json(authors)

|

||||

enclosures = objects.News.normalize_enclosures_json(enclosures)

|

||||

|

||||

data = {

|

||||

'id': self.generate_id(objects.News),

|

||||

'feed_id': feed.id,

|

||||

'original_feed_id': feed.id,

|

||||

'rss_guid': rss_guid,

|

||||

'published': published,

|

||||

'updated': updated,

|

||||

'title': title,

|

||||

'text': text,

|

||||

'web_url': web_url,

|

||||

'comments_url': comments_url,

|

||||

'created': helpers.now(),

|

||||

'read': False,

|

||||

'recycled': False,

|

||||

'authors': authors,

|

||||

'enclosures': enclosures,

|

||||

}

|

||||

self.insert(table=objects.News, data=data)

|

||||

news = self.get_cached_instance(objects.News, data)

|

||||

return news

|

||||

|

||||

def get_news(self, id) -> objects.News:

|

||||

return self.get_object_by_id(objects.News, id)

|

||||

|

||||

def get_news_count(self) -> int:

|

||||

return self.select_one_value('SELECT COUNT(id) FROM news')

|

||||

|

||||

def get_newss(

|

||||

self,

|

||||

*,

|

||||

read=False,

|

||||

recycled=False,

|

||||

feed=None,

|

||||

) -> typing.Iterable[objects.News]:

|

||||

|

||||

if feed is not None and not isinstance(feed, objects.Feed):

|

||||

feed = self.get_feed(feed)

|

||||

|

||||

wheres = []

|

||||

bindings = []

|

||||

|

||||

if feed:

|

||||

feed_ids = [descendant.id for descendant in feed.walk_children()]

|

||||

wheres.append(f'feed_id IN {sqlhelpers.listify(feed_ids)}')

|

||||

|

||||

if recycled is True:

|

||||

wheres.append('recycled == 1')

|

||||

elif recycled is False:

|

||||

wheres.append('recycled == 0')

|

||||

|

||||

if read is True:

|

||||

wheres.append('read == 1')

|

||||

elif read is False:

|

||||

wheres.append('read == 0')

|

||||

if wheres:

|

||||

wheres = ' AND '.join(wheres)

|

||||

wheres = ' WHERE ' + wheres

|

||||

else:

|

||||

wheres = ''

|

||||

query = 'SELECT * FROM news' + wheres + ' ORDER BY published DESC'

|

||||

|

||||

rows = self.select(query, bindings)

|

||||

for row in rows:

|

||||

yield self.get_cached_instance(objects.News, row)

|

||||

|

||||

def get_newss_by_id(self, ids) -> typing.Iterable[objects.News]:

|

||||

return self.get_objects_by_id(objects.News, ids)

|

||||

|

||||

def get_newss_by_sql(self, query, bindings=None) -> typing.Iterable[objects.News]:

|

||||

return self.get_objects_by_sql(objects.News, query, bindings)

|

||||

|

||||

def _get_duplicate_news(self, feed, guid):

|

||||

if feed.isolate_guids:

|

||||

guid = f'_isolate_{feed.id}_{guid}'

|

||||

|

||||

match = self.select_one('SELECT * FROM news WHERE rss_guid == ?', [guid])

|

||||

if match is None:

|

||||

return None

|

||||

|

||||

return self.get_cached_instance(objects.News, match)

|

||||

|

||||

def _ingest_one_news_atom(self, entry, feed):

|

||||

rss_guid = entry.id

|

||||

|

||||

web_url = helpers.pick_web_url_atom(entry)

|

||||

|

||||

updated = entry.updated

|

||||

if updated is not None:

|

||||

updated = updated.text

|

||||

updated = helpers.dateutil_parse(updated)

|

||||

updated = updated.timestamp()

|

||||

|

||||

published = entry.published

|

||||

if published is not None:

|

||||

published = published.text

|

||||

published = helpers.dateutil_parse(published)

|

||||

published = published.timestamp()

|

||||

elif updated is not None:

|

||||

published = updated

|

||||

|

||||

title = entry.find('title')

|

||||

if title:

|

||||

title = title.text.strip()

|

||||

|

||||

if rss_guid:

|

||||

rss_guid = rss_guid.text.strip()

|

||||

elif web_url:

|

||||

rss_guid = web_url

|

||||

elif title:

|

||||

rss_guid = title

|

||||

elif published:

|

||||

rss_guid = published

|

||||

|

||||

if not rss_guid:

|

||||

raise exceptions.NoGUID(entry)

|

||||

|

||||

duplicate = self._get_duplicate_news(feed=feed, guid=rss_guid)

|

||||

if duplicate:

|

||||

log.loud('Skipping duplicate feed=%s, guid=%s', feed.id, rss_guid)

|

||||

return BDBNewsMixin.DUPLICATE_BAIL

|

||||

|

||||

text = entry.find('content')

|

||||

if text:

|

||||

text = text.text.strip()

|

||||

|

||||

comments_url = None

|

||||

|

||||

raw_authors = entry.find_all('author')

|

||||

authors = []

|

||||

for raw_author in raw_authors:

|

||||

author = {

|

||||

'name': raw_author.find('name'),

|

||||

'email': raw_author.find('email'),

|

||||

'uri': raw_author.find('uri'),

|

||||

}

|

||||

author = {key:(value.text if value else None) for (key, value) in author.items()}

|

||||

authors.append(author)

|

||||

|

||||

raw_enclosures = entry.find_all('link', {'rel': 'enclosure'})

|

||||

enclosures = []

|

||||

for raw_enclosure in raw_enclosures:

|

||||

enclosure = {

|

||||

'type': raw_enclosure.get('type', None),

|

||||

'url': raw_enclosure.get('href', None),

|

||||

'size': raw_enclosure.get('length', None),

|

||||

}

|

||||

if enclosure.get('size') is not None:

|

||||

enclosure['size'] = int(enclosure['size'])

|

||||

|

||||

enclosures.append(enclosure)

|

||||

|

||||

news = self.add_news(

|

||||

authors=authors,

|

||||

comments_url=comments_url,

|

||||

enclosures=enclosures,

|

||||

feed=feed,

|

||||

published=published,

|

||||

rss_guid=rss_guid,

|

||||

text=text,

|

||||

title=title,

|

||||

updated=updated,

|

||||

web_url=web_url,

|

||||

)

|

||||

return news

|

||||

|

||||

def _ingest_one_news_rss(self, item, feed):

|

||||

rss_guid = item.find('guid')

|

||||

|

||||

title = item.find('title')

|

||||

if title:

|

||||

title = title.text.strip()

|

||||

|

||||

text = item.find('description')

|

||||

if text:

|

||||

text = text.text.strip()

|

||||

|

||||

web_url = item.find('link')

|

||||

if web_url:

|

||||

web_url = web_url.text.strip()

|

||||

elif rss_guid and rss_guid.get('isPermalink'):

|

||||

web_url = rss_guid.text

|

||||

|

||||

if web_url and '://' not in web_url:

|

||||

web_url = None

|

||||

|

||||

published = item.find('pubDate')

|

||||

if published:

|

||||

published = published.text

|

||||

published = helpers.dateutil_parse(published)

|

||||

published = published.timestamp()

|

||||

else:

|

||||

published = 0

|

||||

|

||||

if rss_guid:

|

||||

rss_guid = rss_guid.text.strip()

|

||||

elif web_url:

|

||||

rss_guid = web_url

|

||||

elif title:

|

||||

rss_guid = f'{feed.id}_{title}'

|

||||

elif published:

|

||||

rss_guid = f'{feed.id}_{published}'

|

||||

|

||||

if not rss_guid:

|

||||

raise exceptions.NoGUID(item)

|

||||

|

||||

duplicate = self._get_duplicate_news(feed=feed, guid=rss_guid)

|

||||

if duplicate:

|

||||

log.loud('Skipping duplicate news, feed=%s, guid=%s', feed.id, rss_guid)

|

||||

return BDBNewsMixin.DUPLICATE_BAIL

|

||||

|

||||

comments_url = item.find('comments')

|

||||

if comments_url is not None:

|

||||

comments_url = comments_url.text

|

||||

|

||||

raw_authors = item.find_all('author')

|

||||

authors = []

|

||||

for raw_author in raw_authors:

|

||||

author = raw_author.text.strip()

|

||||

if author:

|

||||

author = {

|

||||

'name': author,

|

||||

}

|

||||

authors.append(author)

|

||||

|

||||

raw_enclosures = item.find_all('enclosure')

|

||||

enclosures = []

|

||||

for raw_enclosure in raw_enclosures:

|

||||

enclosure = {

|

||||

'type': raw_enclosure.get('type', None),

|

||||

'url': raw_enclosure.get('url', None),

|

||||

'size': raw_enclosure.get('length', None),

|

||||

}

|

||||

|

||||

if enclosure.get('size') is not None:

|

||||

enclosure['size'] = int(enclosure['size'])

|

||||

|

||||

enclosures.append(enclosure)

|

||||

|

||||

news = self.add_news(

|

||||

authors=authors,

|

||||

comments_url=comments_url,

|

||||

enclosures=enclosures,

|

||||

feed=feed,

|

||||

published=published,

|

||||

rss_guid=rss_guid,

|

||||

text=text,

|

||||

title=title,

|

||||

updated=published,

|

||||

web_url=web_url,

|

||||

)

|

||||

return news

|

||||

|

||||

def _ingest_news_atom(self, soup, feed):

|

||||

atom_feed = soup.find('feed')

|

||||

|

||||

if not atom_feed:

|

||||

raise exceptions.BadXML('No feed element.')

|

||||

|

||||

for entry in atom_feed.find_all('entry'):

|

||||

news = self._ingest_one_news_atom(entry, feed)

|

||||

if news is not BDBNewsMixin.DUPLICATE_BAIL:

|

||||

yield news

|

||||

|

||||

def _ingest_news_rss(self, soup, feed):

|

||||

rss = soup.find('rss')

|

||||

|

||||

# This won't happen under normal circumstances since Feed.refresh would

|

||||

# have raised already. But including these checks here in case user

|

||||

# calls directly.

|

||||

if not rss:

|

||||

raise exceptions.BadXML('No rss element.')

|

||||

|

||||

channel = rss.find('channel')

|

||||

|

||||

if not channel:

|

||||

raise exceptions.BadXML('No channel element.')

|

||||

|

||||

for item in channel.find_all('item'):

|

||||

news = self._ingest_one_news_rss(item, feed)

|

||||

if news is not BDBNewsMixin.DUPLICATE_BAIL:

|

||||

yield news

|

||||

|

||||

@worms.transaction

|

||||

def ingest_news_xml(self, soup:bs4.BeautifulSoup, feed):

|

||||

if soup.rss:

|

||||

newss = self._ingest_news_rss(soup, feed)

|

||||

elif soup.feed:

|

||||

newss = self._ingest_news_atom(soup, feed)

|

||||

else:

|

||||

raise exceptions.NeitherAtomNorRSS(soup)

|

||||

|

||||

for news in newss:

|

||||

self.process_news_through_filters(news)

|

||||

|

||||

####################################################################################################

|

||||

|

||||

class BringDB(

|

||||

BDBFeedMixin,

|

||||

BDBFilterMixin,

|

||||

BDBNewsMixin,

|

||||

worms.DatabaseWithCaching,

|

||||

):

|

||||

def __init__(

|

||||

self,

|

||||

data_directory=None,

|

||||

*,

|

||||

create=False,

|

||||

skip_version_check=False,

|

||||

):

|

||||

'''

|

||||

data_directory:

|

||||

This directory will contain the sql file and anything else needed by

|

||||

the process. The directory is the database for all intents

|

||||

and purposes.

|

||||

|

||||

create:

|

||||

If True, the data_directory will be created if it does not exist.

|

||||

If False, we expect that data_directory and the sql file exist.

|

||||

|

||||

skip_version_check:

|

||||

Skip the version check so that you don't get DatabaseOutOfDate.

|

||||

Beware of modifying any data in this state.

|

||||

'''

|

||||

super().__init__()

|

||||

|

||||

# DATA DIR PREP

|

||||

if data_directory is not None:

|

||||

pass

|

||||

else:

|

||||

data_directory = pathclass.cwd().with_child(constants.DEFAULT_DATADIR)

|

||||

|

||||

if isinstance(data_directory, str):

|

||||

data_directory = helpers.remove_path_badchars(data_directory, allowed=':/\\')

|

||||

self.data_directory = pathclass.Path(data_directory)

|

||||

|

||||

if self.data_directory.exists and not self.data_directory.is_dir:

|

||||

raise exceptions.BadDataDirectory(self.data_directory.absolute_path)

|

||||

|

||||

# DATABASE / WORMS

|

||||

self._init_sql(create=create, skip_version_check=skip_version_check)

|

||||

|

||||

# WORMS

|

||||

self.id_type = int

|

||||

self._init_column_index()

|

||||

self._init_caches()

|

||||

|

||||

def _check_version(self):

|

||||

'''

|

||||

Compare database's user_version against constants.DATABASE_VERSION,

|

||||

raising exceptions.DatabaseOutOfDate if not correct.

|

||||

'''

|

||||

existing = self.execute('PRAGMA user_version').fetchone()[0]

|

||||

if existing != constants.DATABASE_VERSION:

|

||||

raise exceptions.DatabaseOutOfDate(

|

||||

existing=existing,

|

||||

new=constants.DATABASE_VERSION,

|

||||

filepath=self.data_directory,

|

||||

)

|

||||

|

||||

def _first_time_setup(self):

|

||||

log.info('Running first-time database setup.')

|

||||

self.executescript(constants.DB_INIT)

|

||||

self.commit()

|

||||

|

||||

def _init_caches(self):

|

||||

self.caches = {

|

||||

objects.Feed: cacheclass.Cache(maxlen=2000),

|

||||

objects.Filter: cacheclass.Cache(maxlen=1000),

|

||||

objects.News: cacheclass.Cache(maxlen=20000),

|

||||

}

|

||||

|

||||

def _init_column_index(self):

|

||||

self.COLUMNS = constants.SQL_COLUMNS

|

||||

self.COLUMN_INDEX = constants.SQL_INDEX

|

||||

|

||||

def _init_sql(self, create, skip_version_check):

|

||||

self.database_filepath = self.data_directory.with_child(constants.DEFAULT_DBNAME)

|

||||

existing_database = self.database_filepath.exists

|

||||

|

||||

if not existing_database and not create:

|

||||

msg = f'"{self.database_filepath.absolute_path}" does not exist and create is off.'

|

||||

raise FileNotFoundError(msg)

|

||||

|

||||

self.data_directory.makedirs(exist_ok=True)

|

||||

log.debug('Connecting to sqlite file "%s".', self.database_filepath.absolute_path)

|

||||

self.sql = sqlite3.connect(self.database_filepath.absolute_path)

|

||||

self.sql.row_factory = sqlite3.Row

|

||||

|

||||

if existing_database:

|

||||

if not skip_version_check:

|

||||

self._check_version()

|

||||

self._load_pragmas()

|

||||

else:

|

||||

self._first_time_setup()

|

||||

|

||||

def _load_pragmas(self):

|

||||

log.debug('Reloading pragmas.')

|

||||

self.executescript(constants.DB_PRAGMAS)

|

||||

self.commit()

|

||||

|

||||

@classmethod

|

||||

def closest_bringdb(cls, path='.', *args, **kwargs):

|

||||

'''

|

||||

Starting from the given path and climbing upwards towards the filesystem

|

||||

root, look for an existing BringRSS data directory and return the

|

||||

BringDB object. If none exists, raise exceptions.NoClosestBringDB.

|

||||

'''

|

||||

path = pathclass.Path(path)

|

||||

starting = path

|

||||

|

||||

while True:

|

||||

possible = path.with_child(constants.DEFAULT_DATADIR)

|

||||

if possible.is_dir:

|

||||

break

|

||||

parent = path.parent

|

||||

if path == parent:

|

||||

raise exceptions.NoClosestBringDB(starting.absolute_path)

|

||||

path = parent

|

||||

|

||||

path = possible

|

||||

log.debug('Found closest BringDB at "%s".', path.absolute_path)

|

||||

bringdb = cls(

|

||||

data_directory=path,

|

||||

create=False,

|

||||

*args,

|

||||

**kwargs,

|

||||

)

|

||||

return bringdb

|

||||

|

||||

def __del__(self):

|

||||

self.close()

|

||||

|

||||

def __repr__(self):

|

||||

return f'BringDB(data_directory={self.data_directory})'

|

||||

|

||||

def close(self) -> None:

|

||||

super().close()

|

||||

|

||||

def generate_id(self, thing_class) -> int:

|

||||

'''

|

||||

Create a new ID number that is unique to the given table.

|

||||

'''

|

||||

if not issubclass(thing_class, objects.ObjectBase):

|

||||

raise TypeError(thing_class)

|

||||

|

||||

table = thing_class.table

|

||||

|

||||

while True:

|

||||

id = RNG.getrandbits(32)

|

||||

exists = self.select_one(f'SELECT 1 FROM {table} WHERE id == ?', [id])

|

||||

if not exists:

|

||||

return id

|

||||

349

bringrss/constants.py

Normal file

349

bringrss/constants.py

Normal file

|

|

@ -0,0 +1,349 @@

|

|||

import requests

|

||||

|

||||

from voussoirkit import sqlhelpers

|

||||

|

||||

DATABASE_VERSION = 1

|

||||

DB_VERSION_PRAGMA = f'''

|

||||

PRAGMA user_version = {DATABASE_VERSION};

|

||||

'''

|

||||

|

||||

DB_PRAGMAS = f'''

|

||||

-- 50 MB cache

|

||||

PRAGMA cache_size = -50000;

|

||||

PRAGMA foreign_keys = ON;

|

||||

'''

|

||||

|

||||

DB_INIT = f'''

|

||||

BEGIN;

|

||||

{DB_PRAGMAS}

|

||||

{DB_VERSION_PRAGMA}

|

||||

----------------------------------------------------------------------------------------------------

|

||||

CREATE TABLE IF NOT EXISTS feeds(

|

||||

id INT PRIMARY KEY NOT NULL,

|

||||

parent_id INT,

|

||||

rss_url TEXT,

|

||||

web_url TEXT,

|

||||

title TEXT,

|

||||

description TEXT,

|

||||

created INT,

|

||||

refresh_with_others INT NOT NULL,

|

||||

last_refresh INT NOT NULL,

|

||||

last_refresh_attempt INT NOT NULL,

|

||||

last_refresh_error TEXT,

|

||||

autorefresh_interval INT NOT NULL,

|

||||

http_headers TEXT,

|

||||

isolate_guids INT NOT NULL,

|

||||

icon BLOB,

|

||||

ui_order_rank INT

|

||||

);

|

||||

CREATE INDEX IF NOT EXISTS index_feeds_id on feeds(id);

|

||||

----------------------------------------------------------------------------------------------------

|

||||

CREATE TABLE IF NOT EXISTS filters(

|

||||

id INT PRIMARY KEY NOT NULL,

|

||||

name TEXT,

|

||||

created INT,

|

||||

conditions TEXT NOT NULL,

|

||||

actions TEXT NOT NULL

|

||||

);

|

||||

----------------------------------------------------------------------------------------------------

|

||||

CREATE TABLE IF NOT EXISTS news(

|

||||

id INT PRIMARY KEY NOT NULL,

|

||||

feed_id INT NOT NULL,

|

||||

original_feed_id INT NOT NULL,

|

||||

rss_guid TEXT NOT NULL,

|

||||

published INT,

|

||||

updated INT,

|

||||

title TEXT,

|

||||

text TEXT,

|

||||

web_url TEXT,

|

||||

comments_url TEXT,

|

||||

created INT,

|

||||

read INT NOT NULL,

|

||||

recycled INT NOT NULL,

|

||||

-- The authors and enclosures are stored as a JSON list of dicts. Normally I

|

||||

-- don't like to store JSON in my databases, but I'm really not interested

|

||||

-- in breaking this out in a many-to-many table to achieve proper normal

|

||||

-- form. The quantity of enclosures is probably going to be low enough, disk

|

||||

-- space is cheap enough, and for the time being we have no SQL queries

|

||||

-- against the enclosure fields to justify a perf difference.

|

||||

authors TEXT,

|

||||

enclosures TEXT,

|

||||

FOREIGN KEY(feed_id) REFERENCES feeds(id)

|

||||

);

|

||||

CREATE INDEX IF NOT EXISTS index_news_id on news(id);

|

||||

CREATE INDEX IF NOT EXISTS index_news_feed_id on news(feed_id);

|

||||

|

||||

-- Not used very often, but when you switch a feed's isolate_guids setting on

|

||||

-- and off, we need to rewrite the rss_guid for all news items from that feed,

|

||||

-- so having an index there really helps.

|

||||

CREATE INDEX IF NOT EXISTS index_news_original_feed_id on news(original_feed_id);

|

||||

|

||||

-- This will be the most commonly used search index. We search for news that is

|

||||

-- not read or recycled, ordered by published desc, and belongs to one of

|

||||

-- several feeds (feed or folder of feeds).

|

||||

CREATE INDEX IF NOT EXISTS index_news_recycled_read_published_feed_id on news(recycled, read, published, feed_id);

|

||||

|

||||

-- Less common but same idea. Finding read + unread news that's not recycled,

|

||||

-- published desc, from your feed or folder.

|

||||

CREATE INDEX IF NOT EXISTS index_news_recycled_published_feed_id on news(recycled, published, feed_id);

|

||||

|

||||

-- Used to figure out which incoming news is new and which already exist.

|

||||

CREATE INDEX IF NOT EXISTS index_news_guid on news(rss_guid);

|

||||

----------------------------------------------------------------------------------------------------

|

||||

|

||||

----------------------------------------------------------------------------------------------------

|

||||

CREATE TABLE IF NOT EXISTS feed_filter_rel(

|

||||

feed_id INT NOT NULL,

|

||||

filter_id INT NOT NULL,

|

||||

order_rank INT NOT NULL,

|

||||

FOREIGN KEY(feed_id) REFERENCES feeds(id),

|

||||

FOREIGN KEY(filter_id) REFERENCES filters(id),

|

||||

PRIMARY KEY(feed_id, filter_id)

|

||||

);

|

||||

----------------------------------------------------------------------------------------------------

|

||||

COMMIT;

|

||||

'''

|

||||

SQL_COLUMNS = sqlhelpers.extract_table_column_map(DB_INIT)

|

||||

SQL_INDEX = sqlhelpers.reverse_table_column_map(SQL_COLUMNS)

|

||||

|

||||

DEFAULT_DATADIR = '_bringrss'

|

||||

DEFAULT_DBNAME = 'bringrss.db'

|

||||

|

||||

# Normally I don't even put version numbers on my projects, but since we're

|

||||

# making requests to third parties its fair for them to know in case our HTTP

|

||||

# behavior changes.

|

||||

VERSION = '0.0.1'

|

||||

http_session = requests.Session()

|

||||

http_session.headers['User-Agent'] = f'BringRSS v{VERSION} github.com/voussoir/bringrss'

|

||||

|

||||

# Thank you h-j-13

|

||||

# https://stackoverflow.com/a/54629675/5430534

|

||||

DATEUTIL_TZINFOS = {

|

||||

'A': 1 * 3600,

|

||||

'ACDT': 10.5 * 3600,

|

||||

'ACST': 9.5 * 3600,

|

||||

'ACT': -5 * 3600,

|

||||

'ACWST': 8.75 * 3600,

|

||||

'ADT': 4 * 3600,

|

||||

'AEDT': 11 * 3600,

|

||||

'AEST': 10 * 3600,

|

||||

'AET': 10 * 3600,

|

||||

'AFT': 4.5 * 3600,

|

||||

'AKDT': -8 * 3600,

|

||||

'AKST': -9 * 3600,

|

||||

'ALMT': 6 * 3600,

|

||||

'AMST': -3 * 3600,

|

||||

'AMT': -4 * 3600,

|

||||

'ANAST': 12 * 3600,

|

||||

'ANAT': 12 * 3600,

|

||||

'AQTT': 5 * 3600,

|

||||

'ART': -3 * 3600,

|

||||

'AST': 3 * 3600,

|

||||

'AT': -4 * 3600,

|

||||

'AWDT': 9 * 3600,

|

||||

'AWST': 8 * 3600,

|

||||

'AZOST': 0 * 3600,

|

||||

'AZOT': -1 * 3600,

|

||||

'AZST': 5 * 3600,

|

||||

'AZT': 4 * 3600,

|

||||

'AoE': -12 * 3600,

|

||||

'B': 2 * 3600,

|

||||

'BNT': 8 * 3600,

|

||||

'BOT': -4 * 3600,

|

||||

'BRST': -2 * 3600,

|

||||

'BRT': -3 * 3600,

|

||||

'BST': 6 * 3600,

|

||||

'BTT': 6 * 3600,

|

||||

'C': 3 * 3600,

|

||||

'CAST': 8 * 3600,

|

||||

'CAT': 2 * 3600,

|

||||

'CCT': 6.5 * 3600,

|

||||

'CDT': -5 * 3600,

|

||||

'CEST': 2 * 3600,

|

||||

'CET': 1 * 3600,

|

||||

'CHADT': 13.75 * 3600,

|

||||

'CHAST': 12.75 * 3600,

|

||||

'CHOST': 9 * 3600,

|

||||

'CHOT': 8 * 3600,

|

||||

'CHUT': 10 * 3600,

|

||||

'CIDST': -4 * 3600,

|

||||

'CIST': -5 * 3600,

|

||||

'CKT': -10 * 3600,

|

||||

'CLST': -3 * 3600,

|

||||

'CLT': -4 * 3600,

|

||||

'COT': -5 * 3600,

|

||||

'CST': -6 * 3600,

|

||||

'CT': -6 * 3600,

|

||||

'CVT': -1 * 3600,

|

||||

'CXT': 7 * 3600,

|

||||

'ChST': 10 * 3600,

|

||||

'D': 4 * 3600,

|

||||

'DAVT': 7 * 3600,

|

||||

'DDUT': 10 * 3600,

|

||||

'E': 5 * 3600,

|

||||

'EASST': -5 * 3600,

|

||||

'EAST': -6 * 3600,

|

||||

'EAT': 3 * 3600,

|

||||

'ECT': -5 * 3600,

|

||||

'EDT': -4 * 3600,

|

||||

'EEST': 3 * 3600,

|

||||

'EET': 2 * 3600,

|

||||

'EGST': 0 * 3600,

|

||||

'EGT': -1 * 3600,

|

||||

'EST': -5 * 3600,

|

||||

'ET': -5 * 3600,

|

||||

'F': 6 * 3600,

|

||||

'FET': 3 * 3600,

|

||||

'FJST': 13 * 3600,

|

||||

'FJT': 12 * 3600,

|

||||

'FKST': -3 * 3600,

|

||||

'FKT': -4 * 3600,

|

||||

'FNT': -2 * 3600,

|

||||

'G': 7 * 3600,

|

||||

'GALT': -6 * 3600,

|

||||

'GAMT': -9 * 3600,

|

||||

'GET': 4 * 3600,

|

||||

'GFT': -3 * 3600,

|

||||

'GILT': 12 * 3600,

|

||||

'GMT': 0 * 3600,

|

||||

'GST': 4 * 3600,

|

||||

'GYT': -4 * 3600,

|

||||

'H': 8 * 3600,

|

||||

'HDT': -9 * 3600,

|

||||

'HKT': 8 * 3600,

|

||||

'HOVST': 8 * 3600,

|

||||

'HOVT': 7 * 3600,

|

||||

'HST': -10 * 3600,

|

||||

'I': 9 * 3600,

|

||||

'ICT': 7 * 3600,

|

||||

'IDT': 3 * 3600,

|

||||

'IOT': 6 * 3600,

|

||||

'IRDT': 4.5 * 3600,

|

||||

'IRKST': 9 * 3600,

|

||||

'IRKT': 8 * 3600,

|

||||

'IRST': 3.5 * 3600,

|

||||

'IST': 5.5 * 3600,

|

||||

'JST': 9 * 3600,

|

||||

'K': 10 * 3600,

|

||||

'KGT': 6 * 3600,

|

||||

'KOST': 11 * 3600,

|

||||

'KRAST': 8 * 3600,

|

||||

'KRAT': 7 * 3600,

|

||||

'KST': 9 * 3600,

|

||||

'KUYT': 4 * 3600,

|

||||

'L': 11 * 3600,

|

||||

'LHDT': 11 * 3600,

|

||||

'LHST': 10.5 * 3600,

|

||||

'LINT': 14 * 3600,

|

||||

'M': 12 * 3600,

|

||||

'MAGST': 12 * 3600,

|

||||

'MAGT': 11 * 3600,

|

||||

'MART': 9.5 * 3600,

|

||||

'MAWT': 5 * 3600,

|

||||

'MDT': -6 * 3600,

|

||||

'MHT': 12 * 3600,

|

||||

'MMT': 6.5 * 3600,

|

||||

'MSD': 4 * 3600,

|

||||

'MSK': 3 * 3600,

|

||||

'MST': -7 * 3600,

|

||||

'MT': -7 * 3600,

|

||||

'MUT': 4 * 3600,

|

||||

'MVT': 5 * 3600,

|

||||

'MYT': 8 * 3600,

|

||||

'N': -1 * 3600,

|

||||

'NCT': 11 * 3600,

|

||||

'NDT': 2.5 * 3600,

|

||||

'NFT': 11 * 3600,

|

||||

'NOVST': 7 * 3600,

|

||||

'NOVT': 7 * 3600,

|

||||

'NPT': 5.5 * 3600,

|

||||

'NRT': 12 * 3600,

|

||||

'NST': 3.5 * 3600,

|

||||

'NUT': -11 * 3600,

|

||||

'NZDT': 13 * 3600,

|

||||

'NZST': 12 * 3600,

|

||||

'O': -2 * 3600,

|

||||

'OMSST': 7 * 3600,

|

||||

'OMST': 6 * 3600,

|

||||

'ORAT': 5 * 3600,

|

||||

'P': -3 * 3600,

|

||||

'PDT': -7 * 3600,

|

||||

'PET': -5 * 3600,

|

||||

'PETST': 12 * 3600,

|

||||

'PETT': 12 * 3600,

|

||||

'PGT': 10 * 3600,

|

||||

'PHOT': 13 * 3600,

|

||||

'PHT': 8 * 3600,

|

||||

'PKT': 5 * 3600,

|

||||

'PMDT': -2 * 3600,

|

||||

'PMST': -3 * 3600,

|

||||

'PONT': 11 * 3600,

|

||||

'PST': -8 * 3600,

|

||||

'PT': -8 * 3600,

|

||||

'PWT': 9 * 3600,

|

||||

'PYST': -3 * 3600,

|

||||

'PYT': -4 * 3600,

|

||||

'Q': -4 * 3600,

|

||||

'QYZT': 6 * 3600,

|

||||

'R': -5 * 3600,

|

||||

'RET': 4 * 3600,

|

||||

'ROTT': -3 * 3600,

|

||||

'S': -6 * 3600,

|

||||

'SAKT': 11 * 3600,

|

||||

'SAMT': 4 * 3600,

|

||||

'SAST': 2 * 3600,

|

||||

'SBT': 11 * 3600,

|

||||

'SCT': 4 * 3600,

|

||||

'SGT': 8 * 3600,

|

||||

'SRET': 11 * 3600,

|

||||

'SRT': -3 * 3600,

|

||||

'SST': -11 * 3600,

|

||||

'SYOT': 3 * 3600,

|

||||

'T': -7 * 3600,

|

||||

'TAHT': -10 * 3600,

|

||||

'TFT': 5 * 3600,

|

||||

'TJT': 5 * 3600,

|

||||

'TKT': 13 * 3600,

|

||||

'TLT': 9 * 3600,

|

||||

'TMT': 5 * 3600,

|

||||

'TOST': 14 * 3600,

|

||||

'TOT': 13 * 3600,

|

||||

'TRT': 3 * 3600,

|

||||

'TVT': 12 * 3600,

|

||||

'U': -8 * 3600,

|

||||

'ULAST': 9 * 3600,

|

||||

'ULAT': 8 * 3600,

|

||||

'UTC': 0 * 3600,

|

||||

'UYST': -2 * 3600,

|

||||

'UYT': -3 * 3600,

|

||||

'UZT': 5 * 3600,

|

||||

'V': -9 * 3600,

|

||||

'VET': -4 * 3600,

|

||||

'VLAST': 11 * 3600,

|

||||

'VLAT': 10 * 3600,

|

||||

'VOST': 6 * 3600,

|

||||

'VUT': 11 * 3600,

|

||||

'W': -10 * 3600,

|

||||

'WAKT': 12 * 3600,

|

||||

'WARST': -3 * 3600,

|

||||

'WAST': 2 * 3600,

|

||||

'WAT': 1 * 3600,

|

||||

'WEST': 1 * 3600,

|

||||

'WET': 0 * 3600,

|

||||

'WFT': 12 * 3600,

|

||||

'WGST': -2 * 3600,

|

||||

'WGT': -3 * 3600,

|

||||

'WIB': 7 * 3600,

|

||||

'WIT': 9 * 3600,

|

||||

'WITA': 8 * 3600,

|

||||

'WST': 14 * 3600,

|

||||

'WT': 0 * 3600,

|

||||

'X': -11 * 3600,

|

||||

'Y': -12 * 3600,

|

||||

'YAKST': 10 * 3600,

|

||||

'YAKT': 9 * 3600,

|

||||

'YAPT': 10 * 3600,

|

||||

'YEKST': 6 * 3600,

|

||||

'YEKT': 5 * 3600,

|

||||

'Z': 0 * 3600,

|

||||

}

|

||||

148

bringrss/exceptions.py

Normal file

148

bringrss/exceptions.py

Normal file

|

|

@ -0,0 +1,148 @@

|

|||

from voussoirkit import stringtools

|

||||

|

||||

class ErrorTypeAdder(type):

|

||||

'''

|

||||

During definition, the Exception class will automatically receive a class

|

||||

attribute called `error_type` which is just the class's name as a string

|

||||

in the loudsnake casing style. NoSuchFeed -> NO_SUCH_FEED.

|

||||

|

||||

This is used for serialization of the exception object and should

|

||||

basically act as a status code when displaying the error to the user.

|

||||

|

||||

Thanks Unutbu

|

||||

http://stackoverflow.com/a/18126678

|

||||

'''

|

||||

def __init__(cls, name, bases, clsdict):

|

||||

type.__init__(cls, name, bases, clsdict)

|

||||

cls.error_type = stringtools.pascal_to_loudsnakes(name)

|

||||

|

||||

class BringException(Exception, metaclass=ErrorTypeAdder):

|

||||

'''

|

||||

Base type for all of the BringRSS exceptions.

|

||||

Subtypes should have a class attribute `error_message`. The error message

|

||||

may contain {format} strings which will be formatted using the

|

||||

Exception's constructor arguments.

|

||||

'''

|

||||

error_message = ''

|

||||

|

||||

def __init__(self, *args, **kwargs):

|

||||

super().__init__()

|

||||

self.given_args = args

|

||||

self.given_kwargs = kwargs

|

||||

self.error_message = self.error_message.format(*args, **kwargs)

|

||||

self.args = (self.error_message, args, kwargs)

|

||||

|

||||

def __str__(self):

|

||||

return f'{self.error_type}: {self.error_message}'

|

||||

|

||||

def jsonify(self):

|

||||

j = {

|

||||

'type': 'error',

|

||||

'error_type': self.error_type,

|

||||

'error_message': self.error_message,

|

||||

}

|

||||

return j

|

||||

|

||||

# NO SUCH ##########################################################################################

|

||||

|

||||

class NoSuch(BringException):

|

||||

pass

|

||||

|

||||

class NoSuchFeed(NoSuch):

|

||||

error_message = 'Feed "{}" does not exist.'

|

||||

|

||||

class NoSuchFilter(NoSuch):

|

||||

error_message = 'Filter "{}" does not exist.'

|

||||

|

||||

class NoSuchNews(NoSuch):

|

||||

error_message = 'News "{}" does not exist.'

|

||||

|

||||

# XML PARSING ERRORS ###############################################################################

|

||||

|

||||

class BadXML(BringException):

|

||||

error_message = '{}'

|

||||

|

||||

class NeitherAtomNorRSS(BadXML):

|

||||

error_message = '{}'

|

||||

|

||||

class NoGUID(BadXML):

|

||||

error_message = '{}'

|

||||

|

||||

# FEED ERRORS ######################################################################################

|

||||

|

||||

class HTTPError(BringException):

|

||||

error_message = '{}'

|

||||

|

||||

class InvalidHTTPHeaders(BringException):

|

||||

error_message = '{}'

|

||||

|

||||

# FILTER ERRORS ####################################################################################

|

||||

|

||||

class FeedStillInUse(BringException):

|

||||

error_message = 'Cannot delete {feed} because it is used by {filters}.'

|

||||

|

||||

class FilterStillInUse(BringException):

|

||||

error_message = 'Cannot delete {filter} because it is used by feeds {feeds}.'

|

||||

|

||||

class InvalidFilter(BringException):

|

||||

error_message = '{}'

|

||||

|

||||

class InvalidFilterAction(InvalidFilter):

|

||||

error_message = '{}'

|

||||

|

||||

class InvalidFilterCondition(InvalidFilter):

|

||||

error_message = '{}'

|

||||

|

||||

# GENERAL ERRORS ###################################################################################

|

||||

|

||||

class BadDataDirectory(BringException):

|

||||

'''

|

||||

Raised by BringDB __init__ if the requested data_directory is invalid.

|

||||

'''

|

||||

error_message = 'Bad data directory "{}"'

|

||||

|

||||

OUTOFDATE = '''

|

||||

Database is out of date. {existing} should be {new}.

|

||||

Please run utilities\\database_upgrader.py "{filepath.absolute_path}"

|

||||

'''.strip()

|

||||

class DatabaseOutOfDate(BringException):

|

||||

'''

|

||||

Raised by BringDB __init__ if the user's database is behind.

|

||||

'''

|

||||

error_message = OUTOFDATE

|

||||

|

||||

class NoClosestBringDB(BringException):

|

||||

'''

|

||||

For calls to BringDB.closest_photodb where none exists between cwd and

|

||||

drive root.

|

||||

'''

|

||||

error_message = 'There is no BringDB in "{}" or its parents.'

|

||||

|

||||

class NotExclusive(BringException):

|

||||

'''

|

||||

For when two or more mutually exclusive actions have been requested.

|

||||

'''

|

||||

error_message = 'One and only one of {} must be passed.'

|

||||

|

||||

class OrderByBadColumn(BringException):

|

||||

'''

|

||||

For when the user tries to orderby a column that does not exist or is

|

||||

not allowed.

|

||||

'''

|

||||

error_message = '"{column}" is not a sortable column.'

|

||||

|

||||

class OrderByBadDirection(BringException):

|

||||

'''

|

||||

For when the user tries to orderby a direction that is not asc or desc.

|

||||

'''

|

||||

error_message = 'You can\'t order "{column}" by "{direction}". Should be asc or desc.'

|

||||

|

||||

class OrderByInvalid(BringException):

|

||||

'''

|

||||

For when the orderby request cannot be parsed into column and direction.

|

||||

For example, it contains too many hyphens like a-b-c.

|

||||

|

||||

If the column and direction can be parsed but are invalid, use

|

||||

OrderByBadColumn or OrderByBadDirection

|

||||

'''

|

||||

error_message = 'Invalid orderby request "{request}".'

|

||||

163

bringrss/helpers.py

Normal file

163

bringrss/helpers.py

Normal file

|

|

@ -0,0 +1,163 @@

|

|||

import bs4

|

||||

import datetime

|

||||

import dateutil.parser

|

||||

import importlib

|

||||

import sys

|

||||

|

||||

from . import constants

|

||||

|

||||

from voussoirkit import cacheclass

|

||||

from voussoirkit import httperrors

|

||||

from voussoirkit import pathclass

|

||||

from voussoirkit import vlogging

|

||||

|

||||

log = vlogging.get_logger(__name__)

|